European Championships 2013 was the best dressage I have ever seen. London 2012 took some beating but this did it for me. Charlotte and Valegro set a new standard in the Grand Prix – it was the first time that I have ever thought that judges could simply start with a 10 and then just take away a little for an error here or there and get exactly the right score. Until Helen and Damon Hill went, all the team medals were up for grabs, and I was glued to the live stream. The top riders were all on form, and the horses looked powerful, active and unstressed – it doesn’t get any better.

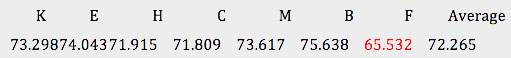

I was shocked when the scores for Michael Eilberg (GBR) and Delphi came up:

The Swedish judge at F had decided that he saw a completely different test to the other 6 judges. Did this affect GBR’s chance of a medal? The answer is yes, it cost GBR gold and instead we got bronze.

How can one judge in 7 make the difference to the medals?

The Team competition was close, with only 0.7% between gold and silver. With 7 judges, for any one judge to make such a difference they would have to be 4% lower than the average. The Swedish judge (SWE) did just that, except he was 7.9% lower. Single-handedly, he took GBR from gold to bronze.
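To see where a figure of around 4% comes from, here is a minimal sketch of the arithmetic. It assumes that a rider's final score is the plain mean of the seven judges' percentages, and that "lower than the average" means lower than the mean of all seven judges, the outlier included. Both are assumptions, and the function name is purely illustrative.

```python
def gain_without_outlier(gap_below_mean: float, n_judges: int = 7) -> float:
    """Rise in a rider's score if the judge who sits `gap_below_mean`
    points under the all-judge mean is replaced by the mean of the others.

    If the panel mean is m and the outlier gives m - d, the other judges
    must average m + d / (n - 1), so the score rises by d / (n - 1).
    """
    return gap_below_mean / (n_judges - 1)


# A judge 4.2 points under the panel mean moves the rider by the
# gold/silver margin; 7.9 points under moves the rider by nearly twice that.
print(round(gain_without_outlier(4.2), 2))  # 0.7
print(round(gain_without_outlier(7.9), 2))  # 1.32
```

Under these assumptions, a judge roughly 4 points under the panel mean is enough to shift one rider by the whole margin between gold and silver.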

What went wrong?

What SWE did was simply to award Delphi 0.7 points lower than the average of the other judges 95% of the time. Or, to cut it another way, his was the lowest mark 81% of the time.
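A comparison of this kind can be sketched as follows. The marks are made up for illustration and are not the actual score sheets; the function name and the data layout (one mark per movement for the judge under review, plus a list of the other judges' marks for the same movement) are assumptions.

```python
from statistics import mean


def gap_profile(judge_marks, other_marks):
    """Compare one judge with the rest of the panel, movement by movement.

    judge_marks: one mark per movement from the judge under review.
    other_marks: for each movement, the list of marks from the other judges.
    Returns (mean gap to the others' average, share of movements scored below it).
    """
    gaps = [own - mean(others) for own, others in zip(judge_marks, other_marks)]
    below = sum(1 for g in gaps if g < 0)
    return mean(gaps), below / len(gaps)


# Invented marks for four movements and three other judges.
judge = [6.5, 7.0, 6.5, 7.5]
others = [[7.5, 7.0, 7.5], [7.5, 8.0, 7.5], [7.0, 7.5, 7.0], [7.5, 7.5, 7.5]]

avg_gap, share_below = gap_profile(judge, others)
print(round(avg_gap, 2), share_below)  # -0.54 0.75
```

A small negative gap on a single movement is unremarkable; it is the combination of a consistent gap and a high share of movements below the panel that adds up.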

If you attended a judging course and you were only 0.5 marks out on a particular movement, your adjudicator would not be worried in the slightest. However, when you do it for most movements, it becomes catastrophic.

Pigeonholing

Why would one judge have such a different opinion? So far as I can see from Global Dressage Analytics, this was the first time that SWE had judged Delphi. Could it be as simple as that?

To be candid, yes, that could easily be the whole reason. I have long suspected that judges have to see you several times, discuss you with their colleagues, then they will have a view on what mark you should be getting, i.e. you are pigeonholed, you are given a rough mark that is considered to be your level.

Let’s say you are considered to be a 68% combination. If you have a great ride at the next competition, you can go up 1%; with a bad ride, down 1%. If there are clear mistakes, such as errors in the changes, then you can go down a bit more. What you will not do is go from 68% to 78% in one competition.

Deeper Team analysis

Having seen this, I immediately decided to calculate the impact on Team GB’s chances of a medal. I also decided to analyse all the judges to see if I could spot any trends. What I discovered was disturbing.

I re-ran the results with each of the following changes to see if it made a difference to the medals:

1. Remove any national bias by taking away the scores from a judge for riders of his/her nationality

2. Remove each judge in turn to see what impact they individually had on ranking

3. Remove the highest score and the lowest score

4. Remove the lowest score
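The four variants can be sketched as small functions applied to one ride at a time. This is a minimal illustration, not the actual analysis: it assumes each ride is stored as a mapping from the judge's nation to the percentage awarded, and the marks shown are invented.

```python
from statistics import mean


def drop_own_nation(marks, rider_nation):
    """Variant 1: ignore the judge who shares the rider's nationality."""
    return mean(v for judge, v in marks.items() if judge != rider_nation)


def drop_judge(marks, judge_to_drop):
    """Variant 2: ignore one named judge."""
    return mean(v for judge, v in marks.items() if judge != judge_to_drop)


def drop_high_and_low(marks):
    """Variant 3: ignore the single highest and single lowest mark."""
    return mean(sorted(marks.values())[1:-1])


def drop_low(marks):
    """Variant 4: ignore the single lowest mark."""
    return mean(sorted(marks.values())[1:])


# Invented marks for one hypothetical GBR ride, keyed by judge nation.
ride = {"GBR": 76.0, "GER": 74.0, "NED": 73.0, "SWE": 66.0,
        "FRA": 75.0, "DEN": 74.0, "AUS": 73.0}

print(mean(ride.values()))                  # 73.0, all seven judges
print(drop_own_nation(ride, "GBR"))         # 72.5
print(round(drop_judge(ride, "SWE"), 2))    # 74.17
print(round(drop_high_and_low(ride), 2))    # 73.8
print(round(drop_low(ride), 2))             # 74.17
```

To re-rank the medals, one of these functions would be applied to every ride and the team totals recomputed under whatever team-total rule the competition uses.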

1. Removing national bias

When I removed the marks given for any rider from the same country as the judge (i.e. remove the GBR judge’s marks for any GBR competitor), it made a major difference to the results:

- GBR moves to gold – up 2 places

- GER moves down to silver – down 1 place

- NED moves down to bronze – down 1 place

In more detail, I looked at which judges might be making this difference: was it all of them or just one or two? In fact, only GER and NED made a difference:

- The German judge:

  - promoted the GER team from silver to gold

  - demoted NED to silver

- The Dutch judge:

  - promoted the NED team from bronze to silver

  - demoted the GBR team from silver to bronze

So, do we suffer from nationalistic bias? It would certainly give that appearance.

Conclusion: as we have 7 judges, we should not allow a judge to adjudicate on riders from their own country. This is an easy change to make and we should do it.

2. Remove each judge in turn to see what effect they had individually:

Removing ---> Effect:

- SWE ---> GBR to gold, GER to silver, NED to bronze

- GER ---> NED to gold, GER to silver

- NED ---> NED to bronze, GBR to silver

Conclusion: Moving from 5 to 7 judges can’t make up for one judge getting it very wrong. Having 7 judges is a good idea at Championships but it doesn’t fix the fundamental issue that the system is inherently inaccurate.

3. Remove the highest and the lowest scores

The effect would be:

- GER – Gold (no change)

- GBR – Silver (+1)

- NED – Bronze (-1)

Conclusion: Superficially, this sounds like a great idea. We take out any judge scoring too high or too low. What it doesn’t do is give the correct result. It doesn’t account for a judge who pushes up their own NF a little and depresses their main competition a little. It only takes out extremes and there are better solutions.

4. Remove the lowest score

This had exactly the same effect as removing the highest and the lowest score. It also suffers from the same problem that it doesn’t improve accuracy.

The system of judging is too complicated

For any movement, a judge must evaluate over 30 potential errors, calculate the deduction that each represents and award a mark. The Dressage Handbook outlines these potential errors but it does not tell you what you should deduct for combinations of these errors. In essence, it is a marvellous treatise as far as it goes but stops short of being a Code of Points. The current system is too complicated for any human to use with the degree of accuracy that modern dressage needs and deserves.

In addition, the current system does nothing to remove the “built-in” biases that exist in all judgemental sports.

Inga Wolframm has described, in Eurodressage and at the Global Dressage Forum, how these biases affect everyone and can’t be removed simply by being aware of them; they are part of human nature. We have certainly seen them at the 2013 European Championships. The most common seen in dressage are:

- Conformity Bias – judges feeling that they have to fit in with their colleagues

- National Bias – scoring one’s own nation higher than the other judges

- Order Bias – the later a rider starts, the better chance they have of a higher mark.

- Memory Bias – remembering the previous marks and awarding marks based on this rather than what was actually seen

Other observations

The award for the judges that reach for the high marks goes to:

- Andrew Gardner (GBR) highest mark 34% of the time

- Isabelle Judet (FRA) highest mark 22% of the time

The award for the strictest judges goes to:

- Gustaf Svalling (SWE) lowest mark 32% of the time

- Dietrich Plewa (GER) lowest mark 26% of the time

The award for the most even-handed judges, i.e. the ones that were the highest or the lowest in equal measure, goes to:

- Leif Törnblad (DEN) 0% highest and lowest in equal measure

- Francis Verbeek (NED) -2%

- Susie Hoevenaars (AUS) -3%

The problem is that they may all have been right, or all wrong. With the current system we can’t know.
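Figures of this kind can be produced with a simple tally. The sketch below is illustrative only: the judges "A", "B" and "C" and their marks are invented, ties are credited to every judge sharing the extreme mark, and the balance is taken as share highest minus share lowest, which is an assumed sign convention.

```python
from collections import Counter


def high_low_shares(rides):
    """rides: list of {judge: mark} dicts, one per ride.

    Returns {judge: (share highest, share lowest, balance)}, where
    balance = share highest - share lowest (0 means even-handed).
    """
    highest, lowest = Counter(), Counter()
    for marks in rides:
        top, bottom = max(marks.values()), min(marks.values())
        for judge, mark in marks.items():
            highest[judge] += mark == top      # True counts as 1
            lowest[judge] += mark == bottom
    n = len(rides)
    return {
        judge: (highest[judge] / n, lowest[judge] / n,
                (highest[judge] - lowest[judge]) / n)
        for judge in highest
    }


# Invented marks for four rides and three hypothetical judges.
rides = [
    {"A": 72.0, "B": 70.0, "C": 68.0},
    {"A": 71.0, "B": 73.0, "C": 69.0},
    {"A": 74.0, "B": 72.0, "C": 73.0},
    {"A": 70.0, "B": 70.5, "C": 69.0},
]

for judge, shares in sorted(high_low_shares(rides).items()):
    print(judge, shares)
# A (0.5, 0.0, 0.5)
# B (0.5, 0.25, 0.25)
# C (0.0, 0.75, -0.75)
```

A tally like this shows who sits at the extremes of the panel, but not who is right.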

What is the solution?

Can you imagine a conversation between a judge and a rider where they discuss why it was a 7.1 rather than a 7.2? Today, this is a fantasy. The theoretical accuracy of our system is ±1.5% per judge. Yet, we need to move to decimal points to make the system sufficiently accurate for modern dressage.

- The system has to be simple enough that normal judges can get it right most of the time and the best judges almost all of the time.

- The system must be transparent so that if there is a doubt about why a mark was given, the mark can be justified objectively.

- The audience must be able to understand what is going on

- The system must be objective – the rules for gaining or losing points must be clear.

- It must be simple – even though training and riding dressage is far from simple, what we evaluate is not that complex.

Component system

Those of you that have read or heard my views before will know that I am a proponent of separating the judging tasks into manageable chunks.

Other judgemental sports have faced these issues and have come up with intelligent, workable solutions that involved separating the judging tasks. For dressage, we could adopt a similar approach:

- We have 8 components identified in the Dressage Handbook (Precision, Rhythm, Suppleness, Contact, Impulsion, Straightness, Collection, Submissiveness)

- We have 7 judges; so each of 4 judges could have two of these components. The other 3 could double-up on the more difficult components and do the job of scoring the Artistic elements in the Freestyle.

- Rather than mark each component out of 10, we could give simpler guidelines. For example, with Rhythm the evaluation could be according to the following scale:

  - Correct rhythm all the time

  - Correct rhythm most of the time

  - Correct rhythm some of the time

  - Correct rhythm none of the time

- Rules for the combination of the Components would need to be worked out by senior judges, trainers and riders.

One judge could mark both Rhythm and Impulsion as they are very closely related.
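To make the idea concrete, the four-level scale above could be represented as follows. This is only a sketch: the point values, the extension of the Rhythm scale to all eight components, and the equal weighting are placeholders, since the combination rules have yet to be worked out.

```python
from enum import IntEnum


class Frequency(IntEnum):
    # Placeholder point values; the real values would need to be agreed.
    NONE_OF_THE_TIME = 0
    SOME_OF_THE_TIME = 1
    MOST_OF_THE_TIME = 2
    ALL_OF_THE_TIME = 3


COMPONENTS = ("Precision", "Rhythm", "Suppleness", "Contact",
              "Impulsion", "Straightness", "Collection", "Submissiveness")


def movement_score(ratings):
    """ratings: {component: Frequency} covering all eight components.

    Returns the unweighted share of the maximum as a percentage.
    Equal weighting is an assumption, not a proposed rule.
    """
    missing = set(COMPONENTS) - set(ratings)
    if missing:
        raise ValueError(f"missing components: {sorted(missing)}")
    total = sum(ratings[c] for c in COMPONENTS)
    return 100 * total / (len(COMPONENTS) * Frequency.ALL_OF_THE_TIME)


# Hypothetical movement: correct rhythm throughout, everything else "most of the time".
example = {c: Frequency.MOST_OF_THE_TIME for c in COMPONENTS}
example["Rhythm"] = Frequency.ALL_OF_THE_TIME
print(round(movement_score(example), 1))  # 70.8
```

Each judge would only ever fill in the entries for their own one or two components, which is the point of splitting the task.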

This is just one idea; I am sure there are other solutions to this problem and we must explore them.

Order Bias

This is most evident in the Grand Prix where starting early in the class is definitely not going to improve your score. This is easy to fix if we insist that all GP draws will be random and not based on the World Ranking List.

No more “quick fixes”, please!

Designing a new system will not be quick, but we need this to survive as a sport.

Going for quick fixes such as removing the highest score and the lowest, or just the lowest, or removing nationalistic scoring does not solve the problem. They still allow a horse to be pigeonholed into a mark and left there. Once the judges decide that your horse is a 63% horse, it is almost impossible to break out. You will never ride down the centre line at Aachen and suddenly have the best ride of your life and get 75%, even if you clearly deserve it. Secondly, the highest or lowest may be right. From their vantage point, the marks they give may be correct. Just being high or low does not necessarily mean you are wrong – what we have to protect against is the judge that is obviously wrong and that is not easy to legislate for.

Our current system has served its purpose well but riders and horses have outgrown its capabilities. It is time that the FEI looks to a fundamental change in the system.

Anonymous judging

There is a strong argument for not showing which judge gave which mark. The argument is that judges will feel more able to mark what they actually see if they don’t have to worry about “conforming” to the scores of the other judges. I would agree with this. However, I would also be concerned that we would lose transparency and accountability and, with the problems we have today, this could be ruinous for the sport. To be clear, the judges don’t want this either; they want to be accountable. So, good idea, just not yet.

Showing only average marks and no rankings by judge

Similarly, if we only show the running average score then the audience won’t know that there are big differences between the judges. Advocates also use the argument that the audience should be focusing on the dressage rather than the scoreboard, so in fact, by limiting the information available, they are, in some convoluted way, helping the audience to appreciate dressage.

This is the “deny that it exists enough times and it will magically disappear” strategy. It helps with neither transparency nor accountability. It doesn’t fix the accuracy problem. It cannot be justified.

FEI, please act now!

I would like to be clear that the problem is the system, NOT the judges. Candidly, I think the judges are doing the best that anyone can do with this type of system. It is inadequate for the Technical mark and even worse for the Artistic element of the Freestyle.

The FEI is organising a Freestyle judging trial and this is right for the sport and I fully support any effort to improve accuracy.

It needs to build on the ideas from the 2009 Dressage Task Force and appoint a new task force to examine the problems with the current judging system and recommend a way forward. The IDRC, IDTC and IDOC should each have members on the committee with experts in statistics, video and other artistic sports that have faced the same issues. This is urgent, and it is for the FEI to lead the way.

by Wayne M Channon, Secretary General IDRC
